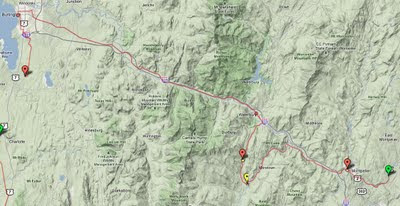

A 7-day trip of about 620 km (I took a day off to visit family), ending with a free open-air concert by Offenbach!

After 4 years without a week-long bike trip, I finally took a vacation. I biked from Montreal down the Chambly Canal to the Richelieu River and around Lake Champlain. The easiest and most beautiful section of the lake (Alburg Springs to Burlington, 78 km) was replaced by a hilly segment after visiting family (East Montpelier to Burlington, 87 km).

Lessons Learned

* Lakes are rivers that couldn't drain because they are surrounded by hills, so the roads around lakes are NOT flat.

* Biking on a canal or a river is much, much flatter than biking around a lake.

* Difficulty of biking around Lake Champlain: medium to hard.

* Difficulty of biking on a canal: easy.

* Recommendation for biking around Lake Champlain: have a support vehicle carry your camping gear. Don't haul it up the mountains unless that's your goal (biking uphill with weight).

Bottom Line

* Before this trip I was heavily biased towards flat bike trips with minimal wind. Now I realize I actually like biking in hills, even uphill, because there's much less wind. However, my next fully loaded bike trip will be on the Erie Canal :)

Total Feet Climbed: 20,108 ft !!!!

Max Grade Uphill: 29 % !!!

Min Elevation: -119 ft (in Montreal)

Max Elevation: 1088 ft (in Moretown VT)

The view was spectacular:

Total Flat Tires: none :)

Total Mechanical Problems: none :)

Total Distance: 620+ km

Total Time: 37:38:40 hours

Average Moving Speed: 15.52 km/h

Max Speed: 57.6 km/h (in Moretown VT, on my 3rd day, after I had climbed up into the mountains by mistake)

Recorded: Sat Jun 25 to Fri Jul 1, 2011

Activity type: Cycling

GPS tracked by My Tracks on Android.

Maps used:

Montreal -> Saint Jean sur Richelieu (La Montérégie Pistes Cyclables 2011)

Saint Jean sur Richelieu -> Alburg Springs (Revivez l'aventure de Champlain à vélo)

East Montpelier -> Burlington (Google Maps)

Burlington -> Shelburne (Burlington Walk Bike Council bike map 3rd edition)

Shelburne VT -> Crown Point NY (Lake Champlain Bikeways Map New Edition)

Crown Point NY -> Ausable Chasm (Lake Champlain Region Mapguide of Essex County NY)

Ausable Chasm -> Saint Jean sur Richelieu (Revivez l'aventure de Champlain à vélo)

The map I wanted to use: Google biking directions cached on my Android...

The trip in pictures

Click on the picture to see a slide show of the pictures of the trip.

The trip in Elevation Statistics

Here are the track-by-track stats generated using My Tracks, an open-source GPS tracking app for Android that gives you complete access to your stats and generates Google Maps and Google Earth data for you. Hopefully it opens the door to a future with readily accessible maps and directions for biking anywhere!

Montreal to Chambly: flat, mostly beautiful bike paths through the woods

Created by My Tracks on Android.

Total Distance: 41.92 km (26.0 mi)

Moving Time: 2:23:09

Average Moving Speed: 17.57 km/h (10.9 mi/h)

Max Speed: 33.35 km/h (20.7 mi/h)

Recorded: Sat Jun 25 12:13:48 EDT 2011
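The "Average Moving Speed" figures appear to be the distance divided by the moving time, so time spent stopped doesn't count against you. As a quick sanity check, assuming that is how My Tracks computes it, this small Python sketch reproduces the Montreal to Chambly numbers. The helper names are hypothetical and not part of My Tracks.

```python
KM_PER_MILE = 1.609344  # international mile, in kilometres


def hms_to_hours(hms: str) -> float:
    """Convert an 'H:MM:SS' duration string (as shown in the stats) to hours."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours + minutes / 60 + seconds / 3600


def avg_moving_speed_kmh(distance_km: float, moving_time: str) -> float:
    """Hypothetical helper: distance over the time actually spent moving."""
    return distance_km / hms_to_hours(moving_time)


def kmh_to_mph(speed_kmh: float) -> float:
    """Convert km/h to mi/h."""
    return speed_kmh / KM_PER_MILE


if __name__ == "__main__":
    # Montreal to Chambly track: 41.92 km in 2:23:09 of moving time
    speed = avg_moving_speed_kmh(41.92, "2:23:09")
    print(f"{speed:.2f} km/h ({kmh_to_mph(speed):.1f} mi/h)")  # 17.57 km/h (10.9 mi/h)
```

The same check on the Montreal to Alburg Springs track (96.21 km in 5:48:30 of moving time) gives about 16.56 km/h, matching the reported figure. Dividing by Total Time instead would give a noticeably lower number, since it includes every stop.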

Montreal to Alburg Springs VT: flat, low-traffic roads through fields and small villages from Saint Jean sur Richelieu to Alburg


Total Distance: 96.21 km (59.8 mi)

Total Time: 7:52:20

Moving Time: 5:48:30

Average Moving Speed: 16.56 km/h (10.3 mi/h)

Max Speed: 32.85 km/h (20.4 mi/h)

Recorded: Sun Jun 26 09:48:38 EDT 2011

Montpelier to Moretown: good-sized shoulder, low traffic, mostly paved except on Three Mile Bridge Road; quite a few joggers and cyclists out training

In this section I got lost. Instead of turning right when I got to Middlesex, I turned left onto Route 100B (thinking it was Route 2). After a bit I realized the river was flowing the wrong way (the Winooski had been flowing with me, but the Mad River was flowing against me...) and that I had climbed up into the mountains. I opened the My Tracks app and saw that the shape of my track wasn't what I expected.

When I got to the intersection of 100B and 100, I had to decide: backtrack down the way I came, or follow the sign that said "Stowe"? I knew full well that the name of a popular ski resort town on a sign pointing the way I was headed probably meant climbing, but I decided to go "see what I could see" by climbing another mountain. It turned out I was right: the worst climbing was yet to come on Route 100 (the 20-24 km section in the graph below) :)


Total Distance: 27.01 km (16.8 mi)

Total Time: 1:55:59

Moving Time: 1:38:22

Average Moving Speed: 16.47 km/h (10.2 mi/h)

Max Speed: 47.70 km/h (29.6 mi/h)

Min Elevation: 117 m (383 ft)

Max Elevation: 323 m (1059 ft)

Elevation Gain: 432 m (1418 ft)

Max Grade: 6 %

Min Grade: -6 %

Recorded: Tue Jun 28 08:36:28 EDT 2011

Activity type: cycling

Moretown to Burlington: what goes up must come down...

When I figured I had reached the top, I started a new track. Here you can see the profile of coming off the mountain and then following Route 2 into Burlington. Just before Burlington there was a nasty hill (which my mom called "French Hill"; I don't know what it's actually called) where I could start to feel the wind coming off the lake, straight at me. It was a painful, traffic-filled ride into Burlington. The map I got from Local Motion would have helped me avoid most of the traffic... I got to Burlington at around 3:00 pm, went to Local Motion, and headed south to get as far as I could that day.


Total Distance: 53.97 km (33.5 mi)

Total Time: 4:28:18

Moving Time: 2:58:09

Average Moving Speed: 18.18 km/h (11.3 mi/h)

Max Speed: 57.60 km/h (35.8 mi/h)

Min Elevation: 5 m (18 ft)

Max Elevation: 332 m (1088 ft)

Elevation Gain: 459 m (1506 ft)

Max Grade: 4 %

Min Grade: -9 %

Recorded: Tue Jun 28 10:32:42 EDT 2011

Burlington to Charlotte: good-sized shoulder, lots of hills...

The hills around Burlington were particularly frustrating. I was already tired from my unexpected morning detour into the hills of central Vermont, and a wind from the south at around 20 km/h cut my speed to only about 10 km/h :( You can easily see the profile of the hills in this graph.


Total Distance: 14.53 km (9.0 mi)

Total Time: 1:38:48

Moving Time: 1:22:47

Average Moving Speed: 10.53 km/h (6.5 mi/h)

Max Speed: 49.50 km/h (30.8 mi/h)

Min Elevation: 1 m (3 ft)

Max Elevation: 88 m (288 ft)

Elevation Gain: 268 m (880 ft)

Recorded: Tue Jun 28 15:12:42 EDT 2011

Charlotte to Button Bay: lots of hills...


Total Distance: 29.79 km (18.5 mi)

Total Time: 2:37:11

Moving Time: 1:58:19

Average Moving Speed: 15.11 km/h (9.4 mi/h)

Max Speed: 44.10 km/h (27.4 mi/h)

Elevation Gain: 380 m (1247 ft)

Max Grade: 29 %

Min Grade: -9 %

Recorded: Wed Jun 29 06:19:06 EDT 2011

Button Bay to Port Henry: flat and beautiful scenery

The bridge at Crown Point was taken down about a year and a half ago and replaced with a ferry, so I even got to go on a ferry ride :) As you can see from the graph below, there weren't a lot of painful hills; they only started on the climb into Port Henry. Route 9 is about the only way to go north on the New York side, and some sections of the pavement are actually full of waves, not from flood water but from the waves of trucks rolling over it. The nice lady at the welcome center in Crown Point suggested that I gun it wherever visibility was low. The cars and trucks were very nice: there wasn't much traffic, so they moved to the other side of the road when passing me. Although it could have been a bad section to ride, I found it fine, maybe just because I was happy to have the wind at my back (my average speed was back up to 15 km/h).


Total Distance: 32.69 km (20.3 mi)

Total Time: 3:32:43

Moving Time: 2:10:28

Average Moving Speed: 15.03 km/h (9.3 mi/h)

Max Speed: 40.70 km/h (25.3 mi/h)

Recorded: Wed Jun 29 11:31:28 EDT 2011

Port Henry to Willisboro: Hills of hell part 1

In this stretch I was very, very happy to have my rear-view mirror. The road was smooth and I could take the entire lane going down the hills (there were some pretty steep descents and I didn't want to melt my brakes).

I also realized why my Lake Champlain Bikeways Map showed the entire New York side north of Whitehall in green: it's some sort of nature preserve. In practice this means the land is too mountainous for anyone to have built sizable farms, and there are so few residents that it's basically a state park. That's also why, when you drive through upstate New York on 87, all you see are mountains and no towns. The cutest town was Westport, and about the only place to buy groceries was the deli just south of Willisboro. The lady at the welcome center in Crown Point recommended camping at Noblewood Park. It was a pretty simple park, and it didn't open until July 1st... so I was 3 days early.


Total Distance: 40.67 km (25.3 mi)

Total Time: 3:26:49

Moving Time: 2:33:56

Average Moving Speed: 15.85 km/h (9.9 mi/h)

Max Speed: 55.02 km/h (34.2 mi/h)

Min Elevation: -4 m (-12 ft)

Max Elevation: 159 m (522 ft)

Elevation Gain: 653 m (2143 ft)

Max Grade: 5 %

Min Grade: -13 %

Recorded: Wed Jun 29 15:34:39 EDT 2011

Activity type: cycling

Willisboro to Ausable Chasm: Hills of hell part 2

I did some leisurely hiking on Thursday morning. I didn't have the strength to shift gears and still keep enough momentum for the roller-coaster uphill profile you can see in the graph below. The first bump is probably Rattlesnake Mountain; the second and third bumps are on Highlands Road to Port Douglas. That road turned out to be the most beautiful part of my trip. It started off as a dirt road and became pavement after a little while. There were probably 2 cars the entire way, mostly just residents, since the road basically led from the middle of nowhere into the non-town of Port Douglas.


Total Distance: 25.95 km (16.1 mi)

Total Time: 3:11:08

Moving Time: 2:00:07

Average Moving Speed: 12.96 km/h (8.1 mi/h)

Max Speed: 53.10 km/h (33.0 mi/h)

Min Elevation: -3 m (-10 ft)

Max Elevation: 183 m (600 ft)

Elevation Gain: 607 m (1992 ft)

Max Grade: 7 %

Min Grade: -13 %

Recorded: Thu Jun 30 09:10:06 EDT 2011

Ausable Chasm to Saint Jean sur Richelieu: one loooong afternoon ending with an Offenbach Concert in the park!!!

After eating lunch at the Ausable Chasm Cafe and using their Wi-Fi, the rest was downhill. I had no trouble getting to Plattsburgh, relaxed for an hour or two on the beach, and then biked all the way to Saint Jean sur Richelieu. I didn't think I could make it, but I managed to pull into town just as the last light was fading and all my fruit was gone. I parked my bike right in front of the entrance to the Offenbach free open-air concert and had 2 beers and 2 hot dogs to celebrate!


Total Distance: 101.67 km (63.2 mi)

Total Time: 8:05:18

Moving Time: 7:35:34

Average Moving Speed: 13.39 km/h (8.3 mi/h)

Max Speed: 54.00 km/h (33.6 mi/h)

Min Elevation: 0 m (-1 ft)

Max Elevation: 85 m (279 ft)

Elevation Gain: 659 m (2162 ft)

Max Grade: 9 %

Min Grade: -8 %

Recorded: Thu Jun 30 13:30:36 EDT 2011

(For some reason my elevation stats are cut off, but suffice it to say it was flat.)